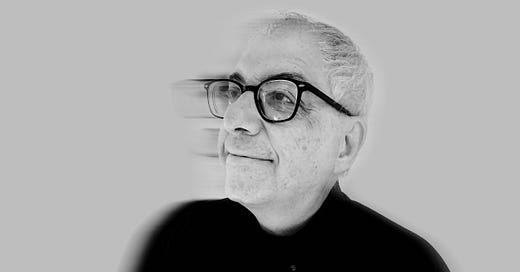

NORTH STAR INTERVIEW: Steven Levy, WIRED Magazine / Editor-at-Large

Steven Levy is a titan of the tech world: he covers big tech and knows its leaders personally. You’d expect nothing less from WIRED’s Editor-at-Large. He’s written a book about Facebook, is big into cryptography, cybersecurity, and privacy, and has interviewed everyone from Steve Jobs to Bill Gates. The author of multiple books, he’s not one for bullsh!t and holds power to account in a fair way.

I recently got to ask him about his coverage of OpenAI, as he’s been spending a lot of time with Sam (whom he’s known for some time now) and the OpenAI team over in San Francisco. OpenAI is the cover story for WIRED’s October issue (out Sept 19th, so look out for the snazzy purple cover), and make sure you read ‘What OpenAI Really Wants’ on WIRED.com.

North Star interviews are always free [archive], but with a paid subscription to C_NCENTRATE you get a whole stack of goodies including a ticket for TBD Conference. Readers say C_NCENTRATE is “the first good decision” they make each week. Subscribe now for £99 a year. Cancel anytime.

You’ve spent a significant chunk of time with Altman over the years, and recently during a busy and stressful period. What’s your personal take on the man? Oracle? New wave, cool, less-broy SV bro? Or just a smart guy who knew he was opening Pandora’s box and did it anyway?

I think Sam is one of those people who at an early age locked into a vision of how technology could make a better future, and has a single-minded commitment to pursuing it. He’s mature enough to sit through stuff that bores him, all for the mission.

Did the tour do anything real for OpenAI and its strategy, or was it all smoke and handshakes, since no government out there seems to have a real handle on what AI companies should and shouldn’t be doing?

I think that the tour had a lot of value for OpenAI. While the spotlight was on the company and on Sam, they had the opportunity to cement their status as the white-hot center of the AI boom. Not only did Sam get to share his views with world leaders, but he connected with hundreds of developers who might build on OpenAI’s API. The press by and large was positive, and the leaders were respectful. It’s BECAUSE governments are grappling with what to do with AI that it’s so valuable for Altman to make his case.

You put a quote in brackets in the piece that I think raises a really huge issue: “(Though, as any number of conversations in the office café will confirm, the ‘build AGI’ bit of the mission seems to offer up more raw excitement to its researchers than the ‘make it safe’ bit.)” Can OpenAI ever be ethical if all the coders and developers simply want to push boundaries without thinking first?

Well, they are thinking about consequences, and ways to “make it safe.” But the idea of superintelligence gets their blood hot. They do believe it can be done safely, and are putting a lot of effort towards that. But the paradox is that some of them, in OpenAI and other AI shops, think that there’s a non-trivial chance that AGI wipes us out, but have decided unilaterally that it’s worth the risk.

You mention cults and believers in the piece, both standard in Silicon Valley and the startup world, and both often with bad endings. What do you see OpenAI doing to ensure that isn’t the case here, and that the best outcomes are fostered rather than the company charging through walls or drinking its own Kool-Aid?

Beginning with GPT-2, OpenAI was aware that it might not be a good idea to simply release its models into the wild. Each iteration of its LLMs undergoes more testing and red-teaming. They do believe that part of getting this right isn’t building perfect guardrails from the get-go, but letting many people use the models so they can discover the weaknesses and fix them. But of course there are no guarantees.

Where do you see the current laundry list of legal issues surrounding training and copyright infringement netting out for OpenAI? Are there any forces that could impact this one way or another?

It’s possible that court actions or legislation will dramatically limit what can go into training sets. In that case, look for OpenAI and others to spend a fortune on licensing. Meanwhile, one might expect open source models and some efforts from overseas to scrape away and use everything for their models.

What didn’t make it into the piece about Altman, the gang, or OpenAI that you think is telling about where OpenAI is heading?

If something great didn’t make it into the story, I’ll use it later! [NOTE TO READER: Make sure you subscribe to Steven’s Plaintext newsletter]

At what point do we need to start demanding specifics from Altman and co about AGI, given that they don’t really know if or when they’ll achieve it? Can anyone right now actually challenge OpenAI, short of putting guns to heads and saying “step away from the keyboard”?

I tried to push Sam on this, and put his answers, vague by his own admission, in the story. I keep guns out of it. Seriously, for decades there has been a huge question of how to deal with powerful technologies that might lead to horrible consequences. One thing that ChatGPT has done is push that conversation into the mainstream. It’s up to all of us to decide whether the balance of risk and reward should lead us to drastic attempts to bottle up AI technology, or to use regulation to strike a super tricky balance between constraint and innovation.

What’s the question you wanted to ask Sam but didn’t, because you were too afraid of the answer?

If I’ve learned anything about journalism, it’s to ask those questions.

For more information or to engage Steven, head over to WIRED, his X profile, or stevenlevy.com.